Project 4A: Image Warping and Mosaicing

"When a shape stirs, it begets not a shape but a shadow."

—Lieh-Tzu (Graham trans.)

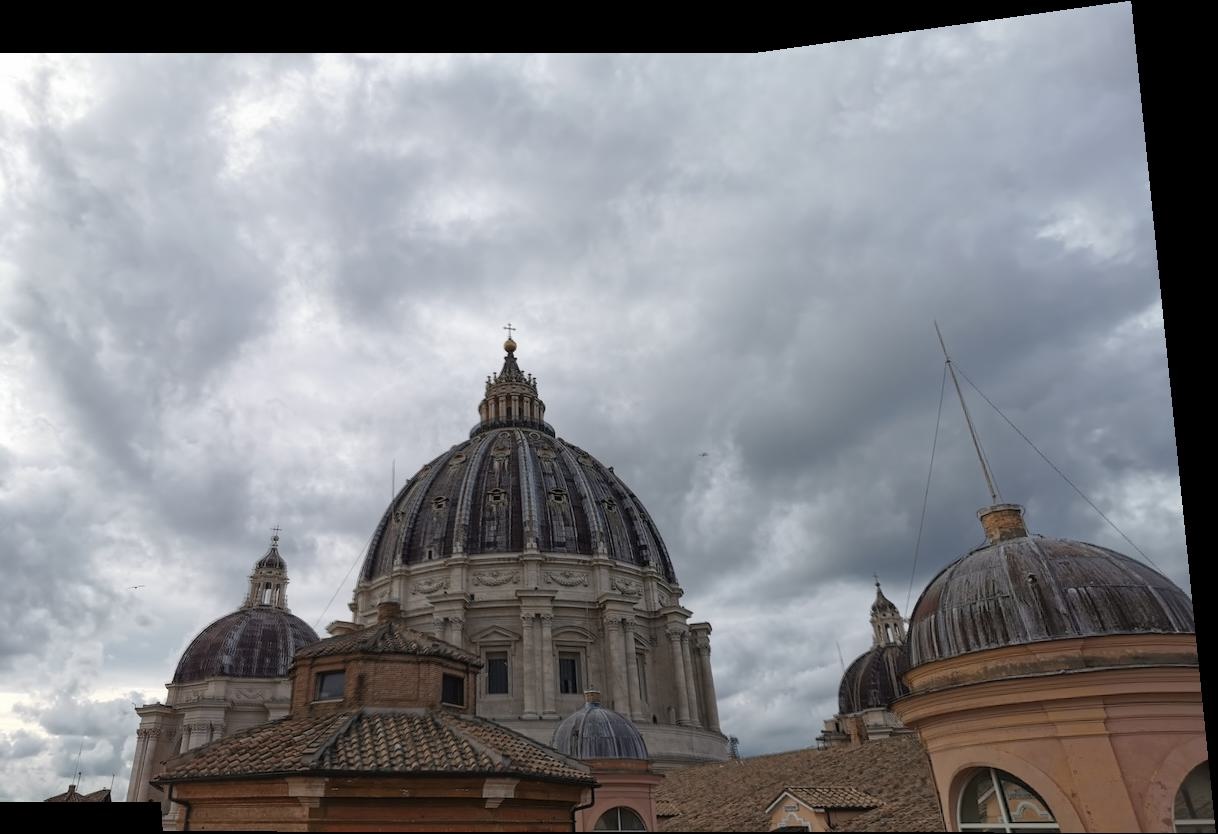

Part 1: Shoot and digitize pictures

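We take several sets of photographs where, within each set, the images are approximately related to each other by a pure rotation of the camera about its center of projection, so that each pair of overlapping images is related by a homography.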

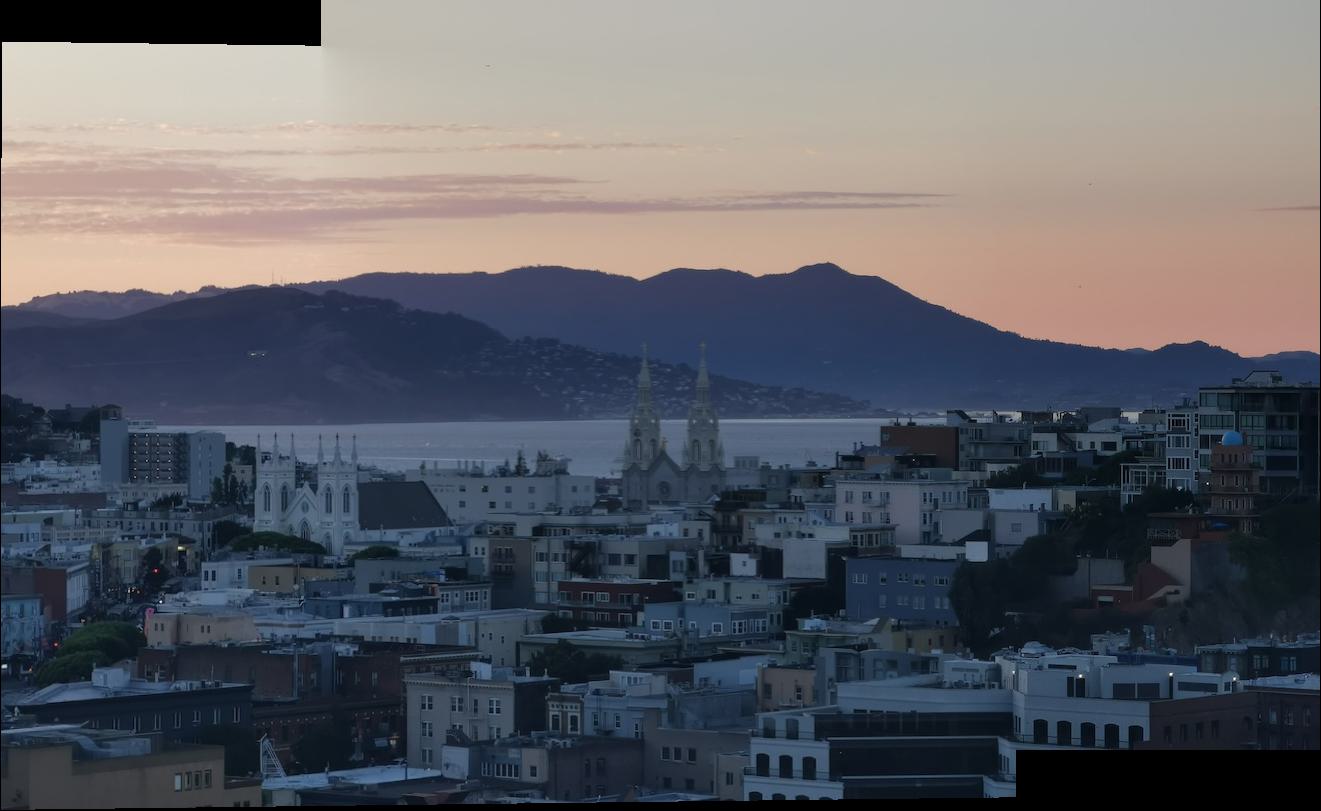

Some examples are a sunset view of San Francisco (Fig. 1a), the interior of the Palace Hotel in San Francisco (Fig. 1b), and St. Peter's Basilica in Vatican City (Fig. 1c).

| Legend | Images |
|---|---|
| Fig. 1a. sf. | |
| Fig. 1b. palace. | |
| Fig. 1c. vatican. | |

Part 2: Recover Homographies

Given a pair of images assumed to be related by a homography, we can estimate the transformation by finding corresponding points (e.g., using a labeling tool developed by a previous student; Fig. 2).

In particular, let the homography be parameterized by the matrix \(H=\begin{pmatrix}h_0&h_1&h_2\\h_3&h_4&h_5\\h_6&h_7&1\end{pmatrix}\) that acts on homogeneous coordinates.

Given a pair of corresponding points \(p_1=\begin{pmatrix}x_1\\y_1\\1\end{pmatrix}\), \(p_2=\begin{pmatrix}x_2\\y_2\\1\end{pmatrix}\) in the two images, a perfect homography should satisfy \(H p_1 = w p_2\) for some scalar \(w\). The third row gives \(w = h_6 x_1 + h_7 y_1 + 1\); substituting this into the first two rows and moving the terms that involve \(h_6\) and \(h_7\) to the left-hand side, we can rewrite the constraints as

\[
\begin{pmatrix}
x_1 & y_1 & 1 & 0 & 0 & 0 & -x_1 x_2 & -y_1 x_2\\
0 & 0 & 0 & x_1 & y_1 & 1 & -x_1 y_2 & -y_1 y_2
\end{pmatrix}
\begin{pmatrix}
h_0\\h_1\\h_2\\h_3\\h_4\\h_5\\h_6\\h_7
\end{pmatrix}
=
\begin{pmatrix}
x_2\\y_2
\end{pmatrix}
\]

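Each correspondence contributes two equations, so four pairs (with no three points collinear) determine the eight unknowns exactly. In practice, we select more than four pairs, stack the resulting pairs of equations of the above form into an overconstrained linear system, and solve for an approximate homography using least squares, which reduces the effect of small errors in the clicked points.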
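A minimal sketch of this least-squares fit, assuming NumPy and points given as (x, y) pixel coordinates. `compute_homography` and `apply_homography` are hypothetical helper names, not the project's actual code; the sketch stacks the two rows above for every correspondence into a 2N x 8 system and solves it with `np.linalg.lstsq`.

```python
import numpy as np


def compute_homography(pts1, pts2):
    """Least-squares estimate of H such that H @ (x1, y1, 1) ~ w * (x2, y2, 1).

    pts1, pts2: (N, 2) arrays of corresponding (x, y) points, N >= 4.
    Returns a 3x3 matrix whose bottom-right entry is fixed to 1,
    matching the h_0..h_7 parameterization in the text.
    """
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    if pts1.shape != pts2.shape or len(pts1) < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    n = len(pts1)
    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for k, ((x1, y1), (x2, y2)) in enumerate(zip(pts1, pts2)):
        # Two rows per correspondence: the 2x8 block from the equation above.
        A[2 * k] = [x1, y1, 1, 0, 0, 0, -x1 * x2, -y1 * x2]
        A[2 * k + 1] = [0, 0, 0, x1, y1, 1, -x1 * y2, -y1 * y2]
        b[2 * k], b[2 * k + 1] = x2, y2
    # Minimize ||A h - b||_2 over the eight unknowns h_0..h_7.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map (N, 2) points through H and divide out the homogeneous scale w."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


if __name__ == "__main__":
    # Synthetic check: points mapped by a known H should give it back.
    H_true = np.array([[1.1, 0.05, 3.0], [-0.02, 0.95, -2.0], [1e-4, 2e-4, 1.0]])
    src = np.array([[0, 0], [100, 0], [100, 80], [0, 80], [30, 55]], dtype=float)
    dst = apply_homography(H_true, src)
    print(np.round(compute_homography(src, dst), 6))
```

On noise-free synthetic correspondences the recovered matrix should match `H_true` up to floating-point error; with hand-clicked points the fit is only approximate. Normalizing the point coordinates before solving (shifting to zero mean and rescaling) is a common way to improve conditioning, though the write-up does not say whether it was used.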

| Fig. 2. Example corresponding points. |
|---|
| |

Part 3: Warp the images

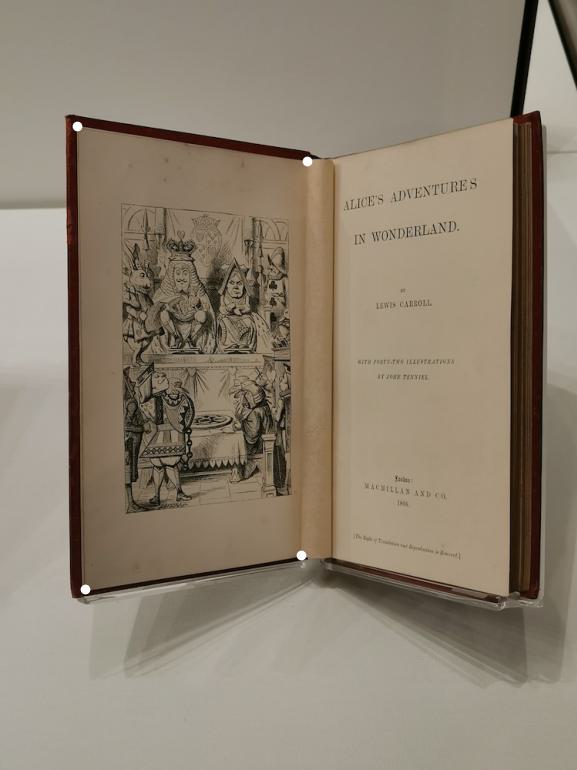

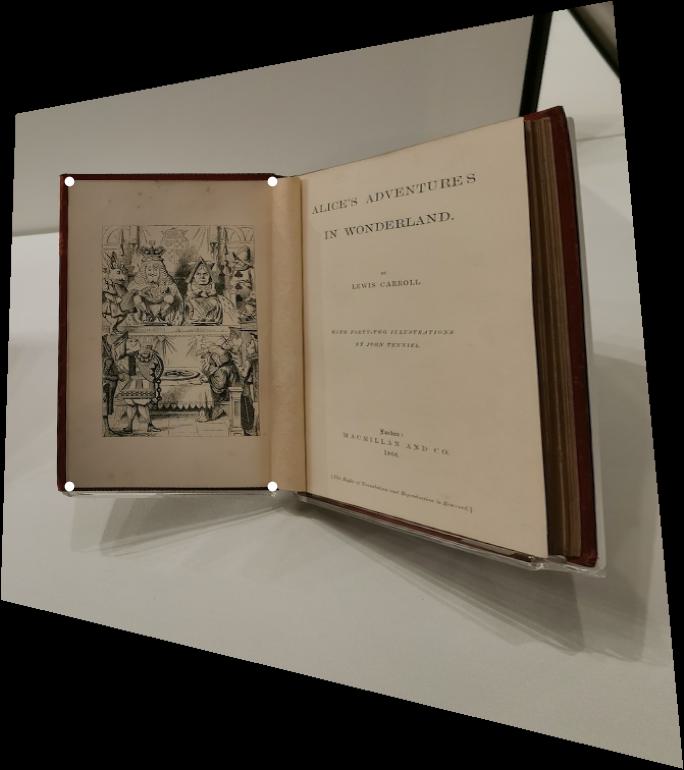

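Given a homography, we can transform an image into another camera projection through inverse warping: for each pixel of the output, we apply \(H^{-1}\) to find its preimage in the source image and interpolate the source there, which avoids the holes that forward-mapping the source pixels would leave. In particular, we can rectify an image by warping a quadrilateral (e.g., a planar surface seen at an angle) to a rectangle (Fig. 3a-c).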
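Inverse warping can be sketched as follows, assuming NumPy arrays and bilinear interpolation (the write-up does not say which interpolation it uses). `inverse_warp` is a hypothetical name; `H` is taken to map source coordinates to output coordinates, so each output pixel is pulled back through \(H^{-1}\).

```python
import numpy as np


def inverse_warp(img, H, out_shape):
    """Inverse-warp `img` with homography H (source -> output coordinates).

    img: (h, w) or (h, w, c) array, with h, w >= 2.
    out_shape: (out_h, out_w) of the output canvas.
    Returns (warped, mask); mask is True where the preimage falls inside img.
    """
    out_h, out_w = out_shape
    h, w = img.shape[:2]
    # Homogeneous (x, y, 1) coordinates of every output pixel.
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    # Pull each output pixel back into the source image with H^{-1}.
    src = np.linalg.inv(H) @ coords
    with np.errstate(divide="ignore", invalid="ignore"):
        sx, sy = src[0] / src[2], src[1] / src[2]
        valid = (sx >= 0) & (sx <= w - 1) & (sy >= 0) & (sy <= h - 1)
    sx, sy = sx[valid], sy[valid]
    # Bilinear interpolation between the four surrounding source pixels.
    x0 = np.minimum(np.floor(sx).astype(int), w - 2)
    y0 = np.minimum(np.floor(sy).astype(int), h - 2)
    fx, fy = sx - x0, sy - y0
    if img.ndim == 3:  # broadcast the weights over color channels
        fx, fy = fx[:, None], fy[:, None]
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x0 + 1]
    bottom = (1 - fx) * img[y0 + 1, x0] + fx * img[y0 + 1, x0 + 1]
    warped = np.zeros((out_h * out_w,) + img.shape[2:])
    warped[valid] = (1 - fy) * top + fy * bottom
    return warped.reshape((out_h, out_w) + img.shape[2:]), valid.reshape(out_h, out_w)
```

For example, `inverse_warp(img, np.eye(3), img.shape[:2])` returns the image unchanged with an all-True mask. The mask is worth keeping, since it marks where the warped image actually has data.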

| Legend | Original | Rectified |
|---|---|---|
| Fig. 3a. alice. | | |
| Fig. 3b. campbell. | | |
| Fig. 3c. cablecar. | | |
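For rectification, four clicked corners make the system from Part 2 square (8 equations, 8 unknowns), so it can be solved directly. The sketch below assumes the corners are clicked in the order top-left, top-right, bottom-right, bottom-left; `rectify_homography` is a hypothetical name, and choosing the rectangle size from the mean edge lengths is a heuristic assumption, since the write-up does not say how the target rectangle was chosen.

```python
import numpy as np


def rectify_homography(quad, width=None, height=None):
    """Homography sending a quadrilateral to an axis-aligned rectangle.

    quad: (4, 2) corners (x, y) ordered top-left, top-right, bottom-right, bottom-left.
    width, height: target size in pixels. If omitted, they are estimated from the
    mean lengths of opposite edges (a heuristic; the object's true aspect ratio
    is generally not known from the quad alone).
    Returns (H, (height, width)).
    """
    quad = np.asarray(quad, dtype=float)
    if width is None:
        width = round(0.5 * (np.linalg.norm(quad[1] - quad[0]) + np.linalg.norm(quad[2] - quad[3])))
    if height is None:
        height = round(0.5 * (np.linalg.norm(quad[3] - quad[0]) + np.linalg.norm(quad[2] - quad[1])))
    width, height = int(width), int(height)
    rect = np.array([[0, 0], [width - 1, 0], [width - 1, height - 1], [0, height - 1]], dtype=float)
    # Exactly four correspondences -> an 8x8 system for h_0..h_7, solved directly.
    A, b = [], []
    for (x1, y1), (x2, y2) in zip(quad, rect):
        A.append([x1, y1, 1, 0, 0, 0, -x1 * x2, -y1 * x2])
        A.append([0, 0, 0, x1, y1, 1, -x1 * y2, -y1 * y2])
        b.extend([x2, y2])
    h = np.linalg.solve(np.array(A), np.array(b))
    return np.append(h, 1.0).reshape(3, 3), (height, width)
```

The returned `H` and `(height, width)` can then be used for inverse warping onto an output canvas of that size.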

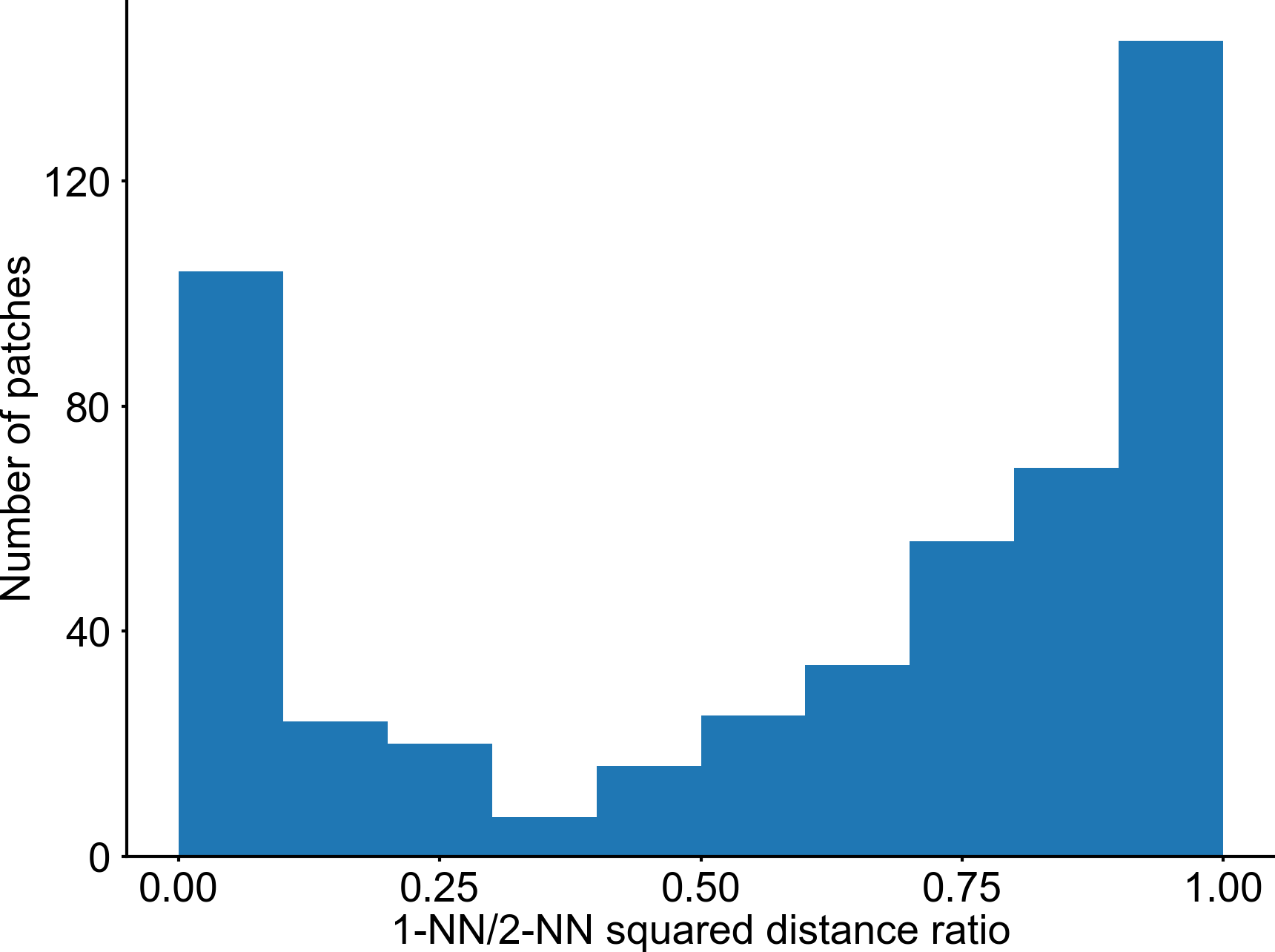

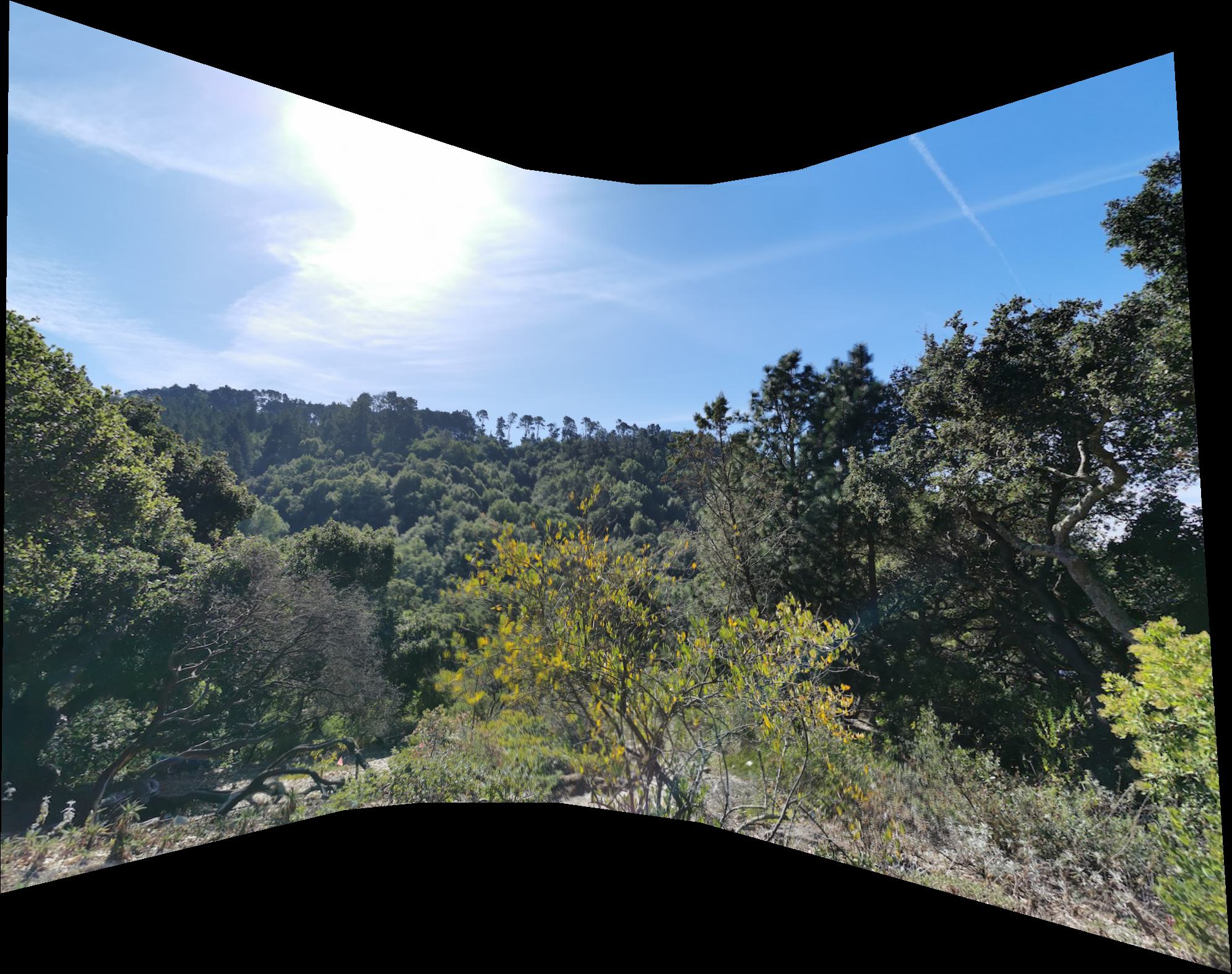

Part 4: Blend images into a mosaic

To create a mosaic, we warp each image in the set into a common projection (e.g., that of one of the images). For images that are far apart and share no corresponding points, we compose homographies through intermediate images: if \(H_{12}\) maps image 1 into image 2 and \(H_{23}\) maps image 2 into image 3, then \(H_{23}H_{12}\) maps image 1 into image 3.
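Chaining can be sketched as below, assuming an ordered sequence of shots where `pairwise[k]` maps image k into image k + 1 (a hypothetical convention; the function names are also hypothetical). Images on the far side of the reference use the inverse of the pairwise map. The sketch also computes a bounding box for the mosaic canvas and a translation `T` that keeps every warped image at non-negative coordinates; the write-up does not describe this step, so it is an assumption about one reasonable way to size the output.

```python
import numpy as np


def chain_homographies(pairwise, ref):
    """Compose pairwise homographies into maps from every image to image `ref`.

    pairwise[k] maps image k into image k + 1. Returns H_to_ref, where
    H_to_ref[k] maps image k into the reference image's projection.
    """
    n = len(pairwise) + 1
    H_to_ref = [None] * n
    H_to_ref[ref] = np.eye(3)
    for k in range(ref - 1, -1, -1):
        # k -> k+1 -> ... -> ref
        H = H_to_ref[k + 1] @ pairwise[k]
        H_to_ref[k] = H / H[2, 2]  # keep the bottom-right entry equal to 1
    for k in range(ref + 1, n):
        # k -> k-1 (inverse of pairwise[k-1]) -> ... -> ref
        H = H_to_ref[k - 1] @ np.linalg.inv(pairwise[k - 1])
        H_to_ref[k] = H / H[2, 2]
    return H_to_ref


def mosaic_canvas(H_to_ref, shapes):
    """Bounding box of all warped image corners in the reference frame.

    shapes: list of (h, w). Returns (T, (out_h, out_w)), where T translates the
    box's top-left corner to (0, 0); image k is then warped with T @ H_to_ref[k].
    """
    pts = []
    for H, (h, w) in zip(H_to_ref, shapes):
        corners = np.array([[0, 0, 1], [w - 1, 0, 1], [w - 1, h - 1, 1], [0, h - 1, 1]], dtype=float)
        p = corners @ H.T
        pts.append(p[:, :2] / p[:, 2:3])
    pts = np.vstack(pts)
    x_min, y_min = np.floor(pts.min(axis=0))
    x_max, y_max = np.ceil(pts.max(axis=0))
    T = np.array([[1.0, 0.0, -x_min], [0.0, 1.0, -y_min], [0.0, 0.0, 1.0]])
    return T, (int(y_max - y_min) + 1, int(x_max - x_min) + 1)
```

Choosing a middle image as the reference tends to keep the outer images less stretched than warping everything into an end image, since fewer homographies are chained on each side.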

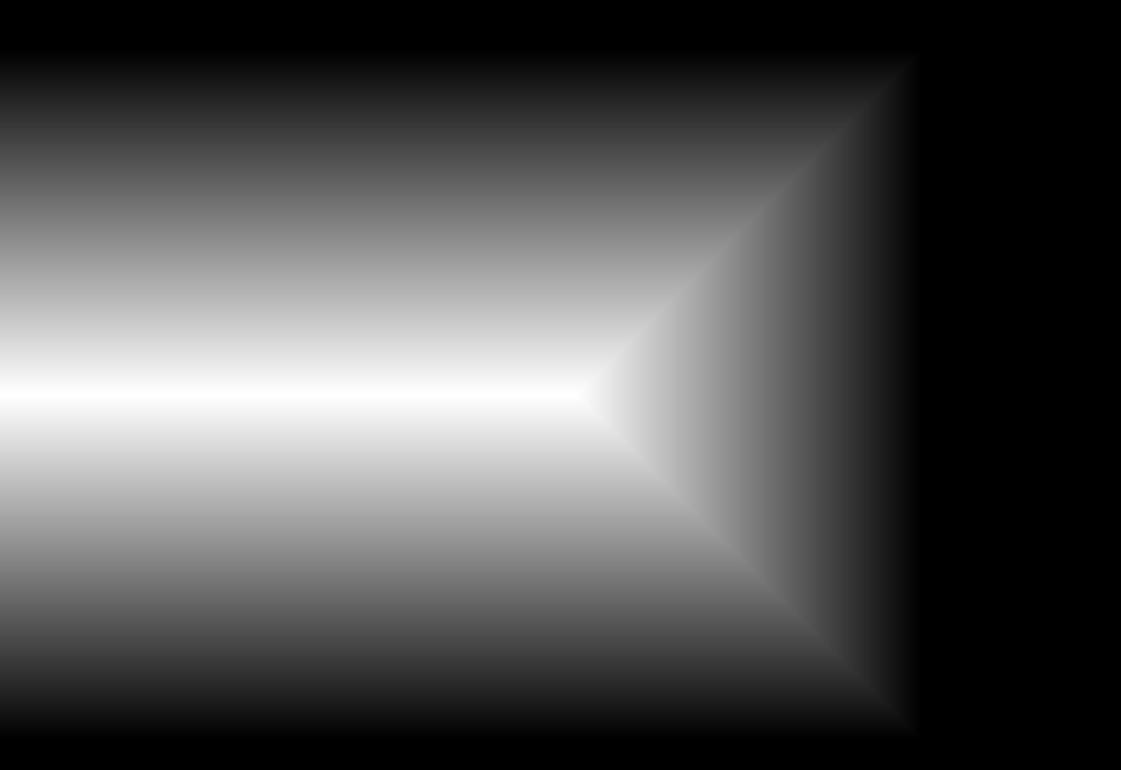

We use a weighted average of the pixel values of images that overlap at a given location (after transforming each individual image). In particular, for each pixel \(x=(i,j)\), let

\[\alpha_{x}^{(n)}=\frac{d_{x}^{(n)}}{\sum_{m}d_{x}^{(m)}}\]

where \(\alpha_{x}^{(n)}\) is the weight of the \(n\)th image at this pixel (defined wherever at least one transformed image covers \(x\)), and

\[d_{x}^{(n)}=\begin{cases}\inf_{p\in \partial (H_nI_n)}\|p-x\|_2, & x\in H_nI_n\\ 0, & x\notin H_nI_n\end{cases}\]

is the Euclidean distance from the pixel to the boundary \(\partial(\cdot)\) of the image \(I_n\) transformed under homography \(H_n\). Pixels far from an image's edge thus receive more weight than pixels near it, so the transition across an overlap is gradual rather than an abrupt seam. Example distance transforms are shown in Fig. 4.

| Fig. 4a. \(d\) for vatican_1. | Fig. 4b. \(d\) for vatican_2. |
|---|---|
| | |
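The distance \(d_x^{(n)}\) can be sketched from a warped image's footprint mask, assuming NumPy; `boundary_distance` is a hypothetical name. It measures the distance to the nearest pixel center outside the mask, which approximates the distance to the continuous boundary \(\partial(H_nI_n)\) to within about a pixel, and pads the canvas so that its edge also counts as outside. This brute-force version is only suitable for small examples.

```python
import numpy as np


def boundary_distance(mask):
    """d_x for one warped image: distance from each pixel inside `mask` to the
    nearest pixel outside it (0 outside the mask).

    Brute force, O(#inside * #outside) memory and time: only for small examples.
    The canvas is padded by one pixel so that the canvas edge counts as outside.
    """
    padded = np.pad(np.asarray(mask, dtype=bool), 1, constant_values=False)
    inside = np.argwhere(padded)
    outside = np.argwhere(~padded)
    d = np.zeros(padded.shape)
    if len(inside):
        diff = inside[:, None, :] - outside[None, :, :]  # (#inside, #outside, 2)
        d[tuple(inside.T)] = np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)
    return d[1:-1, 1:-1]
```

For full-size images, `scipy.ndimage.distance_transform_edt(np.pad(mask, 1))[1:-1, 1:-1]` should compute the same quantity efficiently, since it replaces each nonzero element with its Euclidean distance to the nearest zero element.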

We then take the weighted average \(\sum_n \alpha_x^{(n)} (H_nI_n)(x)\) of the transformed images' pixel values at each location to create the final mosaic (Fig. 5a-c).
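The weighted average can be sketched as follows, assuming NumPy, images already warped onto a common canvas (zero outside their footprints), and one distance map per image; `blend` is a hypothetical name. Pixels covered by no image, where \(\sum_m d_x^{(m)} = 0\) and the weights are undefined, are left black here, which is an assumption rather than something the write-up specifies.

```python
import numpy as np


def blend(warped_images, distances):
    """Distance-weighted average of images on a common canvas.

    warped_images: list of (H, W, C) float arrays, zero outside each footprint.
    distances: list of (H, W) arrays d^(n), zero outside each footprint.
    """
    d = np.stack(distances).astype(float)            # (N, H, W)
    total = d.sum(axis=0)                            # sum over m of d^(m)
    # alpha^(n) = d^(n) / sum_m d^(m), set to 0 where no image covers the pixel.
    alpha = np.divide(d, total, out=np.zeros_like(d), where=total > 0)
    imgs = np.stack(warped_images).astype(float)     # (N, H, W, C)
    return (alpha[..., None] * imgs).sum(axis=0)     # (H, W, C)
```

In the overlap, each image's contribution fades toward zero at its own edge, which is what makes the seams in the composites less visible.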

| Fig. 5a. sf composite. | Fig. 5b. palace composite. | Fig. 5c. vatican composite. |
|---|---|---|
| | | |